The United States is a multicultural democracy. This is simply an empirical fact: the country is a democracy (imperfect by many important measures), and it is multiracial, multi-ethnic, and multi-national. So what is involved in helping bring about a transition to an “inclusive” multicultural democracy, that is, a social and political order that embodies fundamental equality across all groups, fosters a political psychology of mutual respect for the dignity and freedoms of members of other groups, and sustains reasonably harmonious social and political life across and within different communities?

Jack Citrin and David Sears address some of these questions in American Identity and the Politics of Multiculturalism (2014). The book is a notable contribution in many respects, but of special interest for the topic of inclusive democracy is their effort to gain empirical insight into the “ethnic” or group identities of the groups that make up our population, and to offer some ideas about the social processes that contribute to the formation of those identities. Here is how they characterize social identities:

> Social identities refer to the dimensions of one’s self-concept defined by perceptions of similarity with some people and difference from others. They develop because people categorize themselves and others as belonging to groups and pursue their goals through membership in these groups. They have political relevance because they channel feelings of mutuality, obligation, and antagonism, delineating the contours of one’s willingness to help others as well as the boundaries of support for policies allocating resources based on group membership. Indeed, the intimate connection between the personal and the social bases of self-regard becomes clear when one recalls how quickly an insult to the dignity of one’s group can trigger ethnic violence. (Citrin and Sears 2014: 31)

A person’s social identity may depend on many different kinds of personal characteristics: religion (evangelical vs. mainline Protestant vs. Muslim), gender (M, F, X), region (Midwest vs. South vs. Long Island), or occupation (blue collar, white collar, service). But Citrin and Sears underline the particular importance of racial and ethnic affiliations in U.S. social and political life.

> This approach views racial and ethnic minorities as having especially strong ethnic identities, a sense of common fate with fellow group members, and perceptions of discrimination against their own group. These psychological foundations, we suggest, underlie the normative precepts of identity politics and multiculturalist ideology, particularly resonating with its emphasis on privileging ethnicity as a primary social identity. (19)

And they draw special attention to the distinctive features of African-American identity in the United States, which they refer to as “black exceptionalism”:

> Third, the black exceptionalism model hypothesizes that African Americans have always faced a uniquely powerful color line, one that is not completely impermeable but that continues to be difficult to crack. Despite their linguistic assimilation and their significant and ongoing contributions to a common popular culture, many blacks are excluded by the legacy of the past from the level of integration into the mainstream that voluntary immigrant groups have undergone, and, we argue, are continuing to undergo. Indeed, of all the major ethnic and racial groups blacks have, on average, by far the strongest levels of aggrieved ethnic group consciousness. Young blacks are especially likely to have strong group consciousness, suggesting enduring obstacles to interethnic cooperation. (22)

> The black exceptionalism perspective argues that African Americans remain subjected to uniquely high levels of prejudice and discrimination. Key to this view is the notion of the inertial power of history. Even as laws change, fundamental social practices and the mentalities of ordinary people typically follow only slowly, as in the classic contrast of “stateways” with “folkways.” The residues of racial prejudice in the behavior and attitudes of ordinary Americans have persisted long after the Emancipation Proclamation and 1960s-era civil rights legislation eliminated formalized racial inequality from the law books. (35)

It is important to reflect on these last two points. The persistence of racial prejudice and discrimination in US society is evident. There is indeed a “uniquely powerful color line” in the US. Likewise, the resulting social, economic, and political disparities for African American individuals and families are well documented, whether we consider health status, family wealth, or educational opportunities. Black exceptionalism is not simply a perspective; it is a well-understood historical reality (though a reality that the Trump administration is working very hard to conceal). (Here is an earlier post on this topic.)

In this book, and the research supporting it, Citrin and Sears are primarily interested in a problem that is somewhat distinct from the question of white racial attitudes: the question of “national identity” in the context of multiple “ethnic or racial identities”, which they regard as the key issue raised by multiculturalism. Does the fact that Alice identifies as an African American woman make her less likely to have a strong affinity with the nation as a whole? Is she less “patriotic” than a typical member of another ethnic group? Is there a process of “assimilation” through which local identities (“Polish-American”, “Cuban-American”) subside in favor of a composite “American” identity? (For that matter, is this question part of what lay behind the right’s hysterical reaction to the Bad Bunny halftime show?)

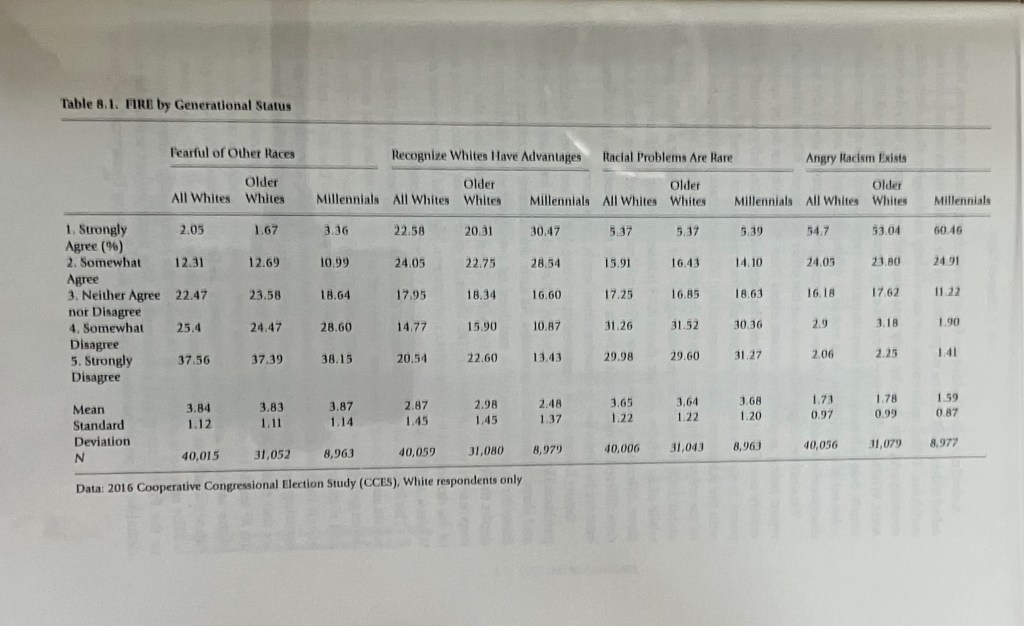

On this framing, the question raised by American Identity and the Politics of Multiculturalism is whether specific ethnic and racial identities are compatible with an overarching “civic” or “national” identity. Citrin and Sears consider three broad frameworks: cosmopolitan liberalism, soft multiculturalism, and hard multiculturalism. The question of whether levels of “racial and ethnic antagonism” have changed within various groups is secondary. In fact, the topic of generational change in racial attitudes (whether Millennials are less racist than Boomers, for example) is not addressed directly here at all. And yet Sears is himself one of the chief architects of the “symbolic racism” school of thought: the idea that the key change between generations has been the replacement of “old-fashioned biological racism” by a more “color-blind” racism that nonetheless perpetuates white antagonism toward, and fear of, African Americans.

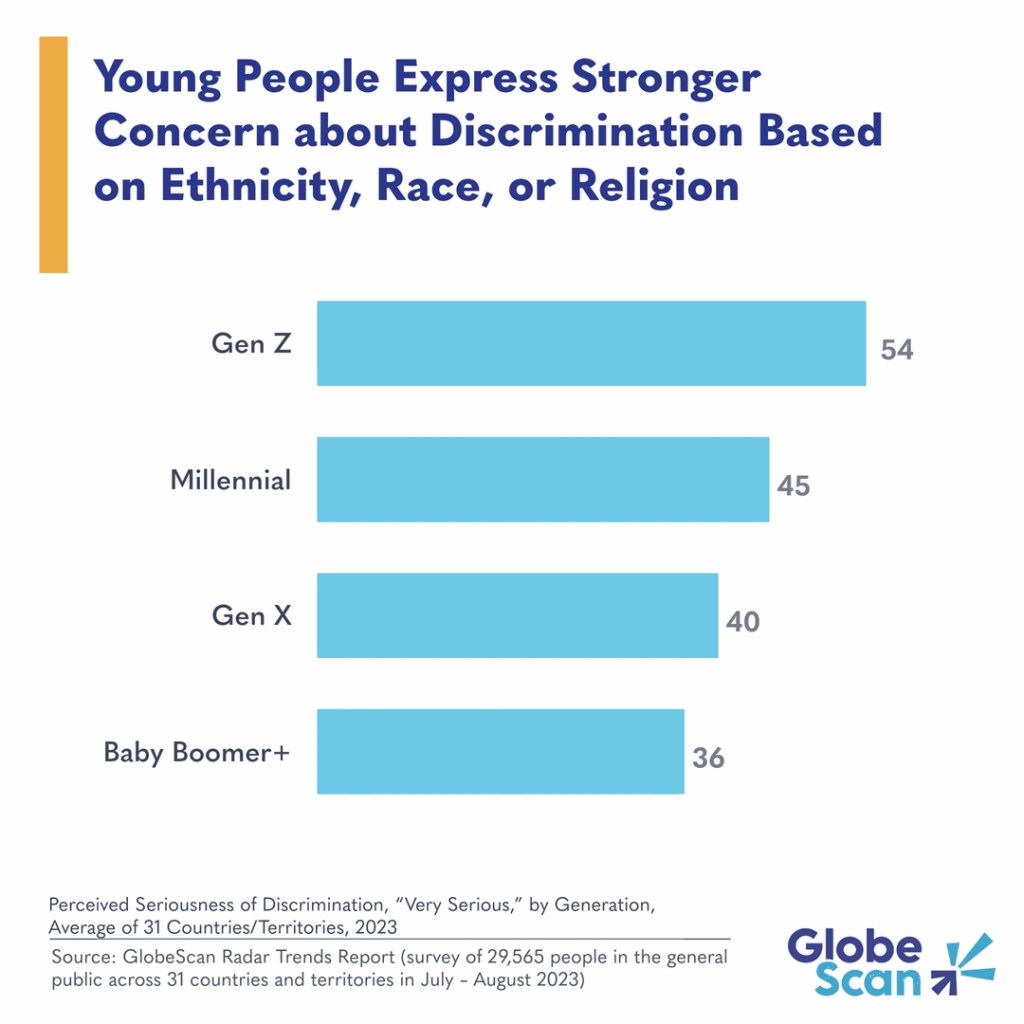

So how are we to move beyond this “uniquely powerful color line”? Racism is a reality that is conveyed through individual actions and institutional effects. Part of progressive change must take the form of change in the attitudes and culture of white people, and of young people in particular. So it is especially important to have empirical and sociological data about the evolution of white racial attitudes since 1950. How have white attitudes, beliefs, and stereotypes about African American people changed during these decades? Has there been generational change? The “impressionable years” hypothesis, which holds that individuals are highly susceptible to attitude change during late adolescence and early adulthood and that their attitudes then “crystallize” and remain stable for the rest of their lives, is a cornerstone of some theories of political socialization (Krosnick and Alwin, “Aging and susceptibility to attitude change”). Did the dramatic moments and struggles of the civil rights movement change the way that young white people thought about their black compatriots? (The revolution was televised!) Did the Obama presidency or the Black Lives Matter movement move the dial? Are we a less racist society today when it comes to attitudes, stereotypes, and expectations? The arguments offered by DeSante and Smith in Racial Stasis suggest that the answer is: not very much. And Eduardo Bonilla-Silva (2022) fills in many of the blanks in Racism Without Racists: Color-Blind Racism and the Persistence of Racial Inequality in America. We need a new surge of practical thinking about how a more genuinely inclusive and respectful “culture of multiculturalism” will come about.